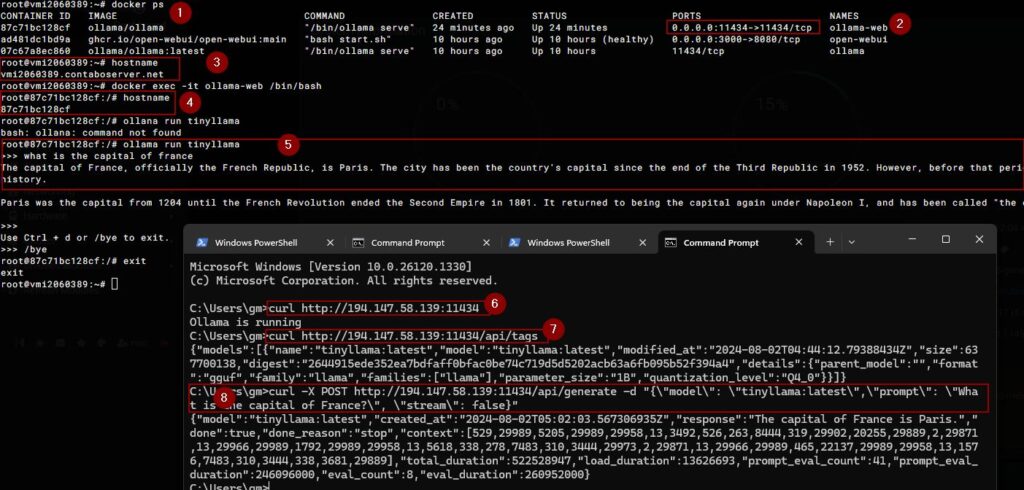

- Using Docker, I installed OLLama.

- The whole process in a picture:

OLLama with Docker: Highlights

OLLama is a tool for running large language models (LLMs) on your own machine. Running it in Docker streamlines the deployment and scaling of AI applications. This post covers the key highlights of using OLLama with Docker, including its advantages and practical applications.

What is OLLama?

OLLama is an open-source tool for downloading and running large language models, such as Llama and Mistral, on your own hardware. It serves the models through a command-line interface and a REST API, and the models it runs can handle tasks such as:

- Natural language understanding

- Text generation

- Contextual analysis

For more information, you can visit the OLLama official website.

Why Use Docker?

Docker is a platform that enables developers to automate the deployment of applications inside lightweight, portable containers. It offers several benefits:

- Consistency: Ensures that the application runs the same way in different environments.

- Isolation: Keeps applications separated, minimizing conflicts.

- Scalability: Simplifies scaling applications across multiple servers.

Learn more about Docker on its official site.

Combining OLLama with Docker

Integrating OLLama with Docker provides a robust solution for deploying AI models. Here are some key highlights:

Simplified Deployment

- Ease of Setup: Docker containers encapsulate all the dependencies and configurations needed to run OLLama, simplifying the setup process.

- Portability: The same image runs on any host with Docker and a supported CPU architecture, so moving OLLama between environments does not require reinstalling it.
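To make the portability point concrete, here is a minimal sketch for copying the OLLama image to a machine without registry access, using docker save and docker load. The run helper, the DRY_RUN switch (set DRY_RUN=1 to print commands instead of executing them), and the default archive name ollama-image.tar are assumptions of this sketch, not part of Docker or OLLama.

```shell
#!/usr/bin/env bash
# Copy the OLLama image between machines via a tar archive.
# Assumption: the archive name ollama-image.tar is a hypothetical default.
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (a convention of this sketch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# On the source machine: write the image to a tar archive.
export_ollama_image() {
  local archive="${1:-ollama-image.tar}"
  run docker save -o "$archive" ollama/ollama:latest
}

# On the target machine: load the archive into the local image store.
import_ollama_image() {
  local archive="${1:-ollama-image.tar}"
  run docker load -i "$archive"
}
```

Source the script, call export_ollama_image on the source host, copy the tar file over, then call import_ollama_image on the target. Models you download are stored under /root/.ollama inside the container rather than in the image, so they are not included in the archive.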

Enhanced Scalability

- Load Balancing: Requests can be spread across several OLLama containers, for example with a reverse proxy in front of them or with Docker Swarm's built-in routing, making better use of available resources.

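- Horizontal Scaling: Add or remove OLLama instances as load changes; with a load balancer in front, the remaining instances keep serving requests while others start or stop. Each instance loads its own copy of a model into memory, so plan capacity accordingly.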
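Docker does not balance traffic between standalone containers on its own; that job falls to a reverse proxy or to Docker Swarm. The sketch below covers only the scaling half: it starts N independent OLLama containers, each publishing the API on its own host port. The container names (ollama_0, ollama_1, ...), volume names, base port, run helper, and DRY_RUN switch are hypothetical choices for illustration.

```shell
#!/usr/bin/env bash
# Start and stop several independent OLLama replicas.
# Assumptions: names ollama_<i>, one volume per replica, host ports from BASE_PORT upward.
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (a convention of this sketch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# start_ollama_replicas COUNT [BASE_PORT]
# Replica i publishes container port 11434 on host port BASE_PORT + i.
start_ollama_replicas() {
  local count="$1" base_port="${2:-11434}" i
  for ((i = 0; i < count; i++)); do
    run docker run -d \
      --name "ollama_$i" \
      -v "ollama_$i:/root/.ollama" \
      -p "$((base_port + i)):11434" \
      ollama/ollama:latest
  done
}

# stop_ollama_replicas COUNT: force-remove the containers started above.
stop_ollama_replicas() {
  local count="$1" i
  for ((i = 0; i < count; i++)); do
    run docker rm -f "ollama_$i"
  done
}
```

For example, start_ollama_replicas 3 starts containers listening on host ports 11434, 11435, and 11436, which a proxy could then target. Each replica gets its own volume in this sketch, so models must be pulled into each one separately; sharing one volume saves disk space but is left out here to keep the replicas fully independent.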

Improved Resource Management

- Isolation: Each Docker container runs in its own isolated environment, so OLLama's files, processes, and dependencies stay separate from other software on the host.

- Resource Allocation: Docker can cap a container's CPU and memory use (for example with the --cpus and --memory options of docker run), so OLLama cannot starve other services on the same host.
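The resource limits above map directly to docker run options. This is a minimal sketch, assuming a single container named ollama_container as in the Getting Started steps. The default limits (4 CPUs, 8 GB of memory), the run helper, and the DRY_RUN switch are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Start OLLama with CPU and memory caps.
# Assumption: the defaults (4 CPUs, 8 GB) are illustrative; size them for your models.
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (a convention of this sketch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# run_ollama_limited [CPUS] [MEMORY]
run_ollama_limited() {
  local cpus="${1:-4}" memory="${2:-8g}"
  run docker run -d --name ollama_container \
    --cpus "$cpus" --memory "$memory" \
    -v ollama:/root/.ollama -p 11434:11434 \
    ollama/ollama:latest
}

# One-off snapshot of the container's current CPU and memory usage.
show_ollama_usage() {
  run docker stats --no-stream ollama_container
}
```

If a container with that name already exists, remove it first with docker rm -f ollama_container. A model that needs more memory than the cap allows may fail to load, so size the limit for the largest model you intend to run.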

Practical Applications

Using OLLama with Docker opens up numerous possibilities in AI-driven applications, such as:

- Chatbots: Deploy chatbots that answer customer queries using a self-hosted model.

- Content Generation: Automate the creation of articles, reports, and other content forms.

- Data Analysis: Analyze and interpret large datasets to derive meaningful insights.
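Chatbots and content generation both come down to sending prompts to OLLama's REST API. The sketch below posts one prompt to the /api/generate endpoint with streaming turned off, so the reply arrives as a single JSON object whose response field holds the generated text. It assumes the API is reachable at http://localhost:11434 and that the chosen model (llama3 in the usage example, a hypothetical choice) has already been pulled. The json_string escaping helper, the run helper, and the DRY_RUN switch are deliberately minimal conventions of this sketch.

```shell
#!/usr/bin/env bash
# Send one prompt to OLLama's REST API and get a single (non-streamed) JSON reply.
# Assumptions: API at http://localhost:11434 by default; the model is already pulled.
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (a convention of this sketch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Minimal JSON string escaping: backslashes and double quotes are escaped,
# newlines and tabs are folded into spaces. Other control characters are not handled.
json_string() {
  printf '%s' "$1" | tr '\n\t' '  ' | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# ollama_generate MODEL PROMPT [BASE_URL]
ollama_generate() {
  local model="$1" prompt="$2" base_url="${3:-http://localhost:11434}"
  local body
  body="{\"model\": \"$(json_string "$model")\", \"prompt\": \"$(json_string "$prompt")\", \"stream\": false}"
  run curl -s -H 'Content-Type: application/json' -d "$body" "$base_url/api/generate"
}
```

For example: ollama_generate llama3 "Draft a short reply to a customer asking about shipping times." If jq is installed, piping the output through jq -r .response prints only the generated text.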

Getting Started

To get started with OLLama and Docker, follow these steps:

- Install Docker: Download and install Docker from the official Docker website.

- Pull the OLLama image: Use the Docker CLI to pull the official image.

```shell
docker pull ollama/ollama:latest
```

- Run the OLLama container: Start a container in the background, keep downloaded models in a named volume, and publish the API port 11434 to the host.

```shell
docker run -d --name ollama_container -v ollama:/root/.ollama -p 11434:11434 ollama/ollama:latest
```
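Once the container is running, you can check that the API answers and download a model using the ollama CLI bundled in the image. This sketch assumes port 11434 is published to the host (for example with -p 11434:11434) and uses llama3 as a placeholder model name; the run helper and DRY_RUN switch are conventions of the sketch.

```shell
#!/usr/bin/env bash
# Post-install checks for the ollama_container started above.
# Assumptions: port 11434 is published; "llama3" is only a placeholder model name.
set -euo pipefail

# Print the command instead of running it when DRY_RUN=1 (a convention of this sketch).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Ask the server for its version; a JSON reply means the API is up.
check_ollama() {
  run curl -s http://localhost:11434/api/version
}

# Download a model with the ollama CLI that ships inside the image.
pull_model() {
  run docker exec ollama_container ollama pull "${1:-llama3}"
}

# Open an interactive chat session with a downloaded model.
chat_with_model() {
  run docker exec -it ollama_container ollama run "${1:-llama3}"
}
```

check_ollama should print a small JSON object with the server version. pull_model downloads the model into the container's model store, and chat_with_model opens an interactive prompt in the terminal.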

Conclusion

Integrating OLLama with Docker provides a powerful and flexible solution for deploying AI models. It simplifies the deployment process, enhances scalability, and improves resource management. By leveraging these technologies, developers can build more efficient and robust AI-driven applications.

For more details, visit the OLLama documentation and the Docker documentation.